Agency looks to develop guidance for human-in-the-loop processes

AI experts at NIST (the National Institute of Standards and Technology) have called on organizations to take a ‘socio-technical’ approach to mitigating bias in AI.

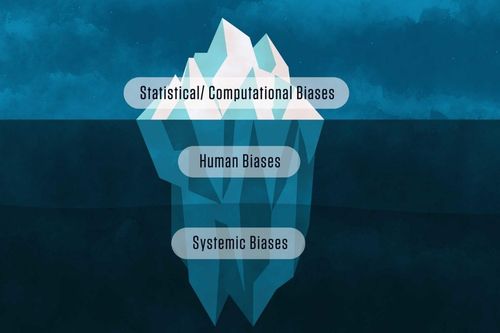

In a newly released publication on standards for managing bias in AI, the authors suggest that organizations should recognize that AI operates in a larger social context, and that purely technical efforts to solve the problem of bias will fall short.

The document suggests that, to manage the risks posed by bias, companies should create new norms around how AI is built and deployed.

Taking a socio-technical approach would require companies to account for the values and behaviors modeled from the datasets they use, the humans who interact with those systems, and the organizational factors that shape their commission, design, development and ultimate deployment.

“Understanding AI as a socio-technical system acknowledges that the processes used to develop technology are more than their mathematical and computational constructs,” the paper reads.

“A socio-technical approach to AI takes into account the values and behavior modeled from the datasets, the humans who interact with them, and the complex organizational factors that go into their commission, design, development, and ultimate deployment.”

NIST author Reva Schwartz said socio-technical approaches to AI are an emerging area, noting that organizations “often default to overly technical solutions for AI bias issues.”

“But these approaches do not adequately capture the societal impact of AI systems,” she said, adding, “The expansion of AI into many aspects of public life requires extending our view to consider AI within the larger social system in which it operates.”

The NIST authors conclude their paper by saying the agency plans to develop formal guidance on how to implement human-in-the-loop processes that don’t perpetuate biases. NIST is also set to run a series of public workshops aimed at drafting a technical report on addressing AI bias.

Image: N. Hanacek/NIST

Written by Ben Wodecki and republished with permission from AI Business.